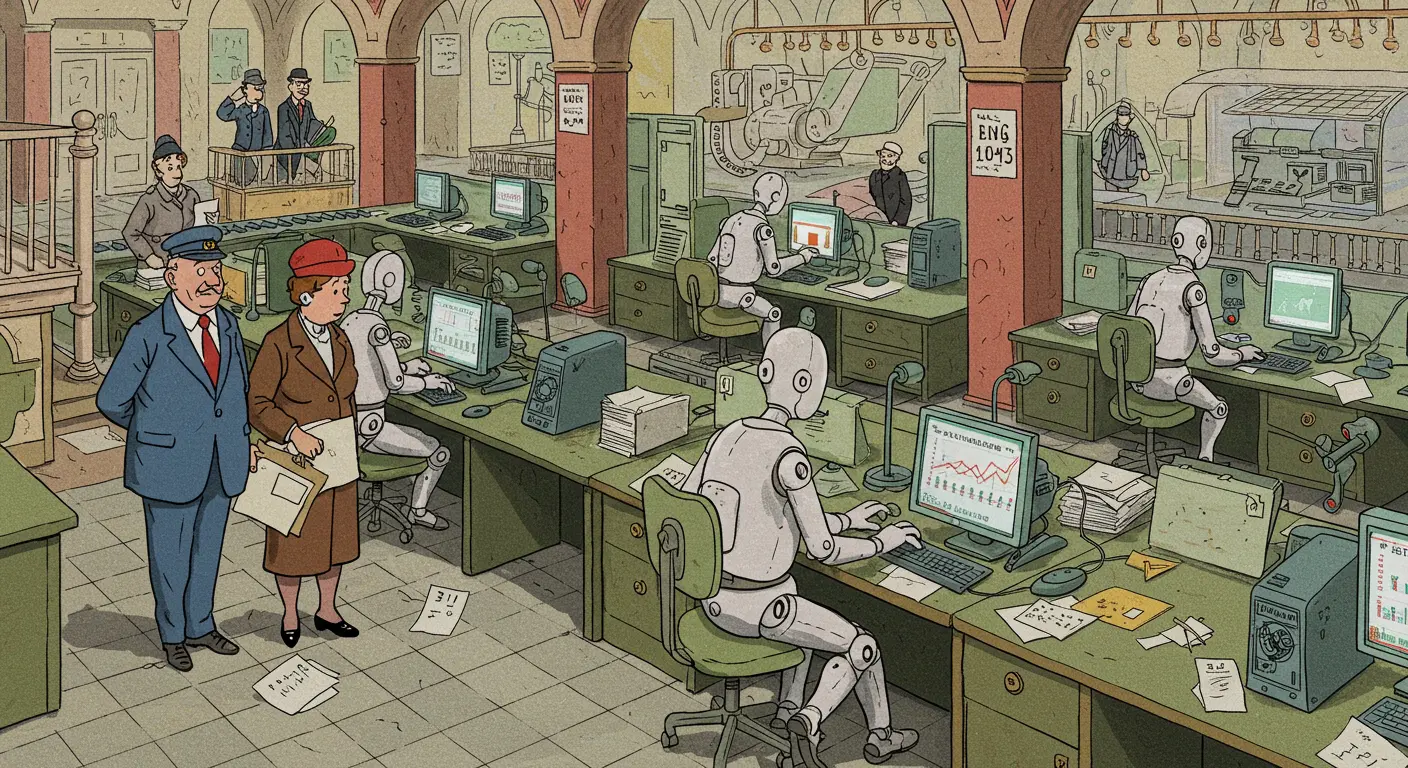

I’ve been thinking a lot about efficiency in the public sector recently. This post looks at ideas for increasing the efficiency of analysis and operations through automation, good coding practices, artificial intelligence, and, well, (meta-?) analysis. This is the second post in this series; see the previous post for ideas on efficiency related to communication and co-ordination.

What is meant by analysis and operations?

What does it mean to make analysis more efficient? It is to produce analysis faster, cheaper, better, or in higher volume. Automation is key to doing this. And I’m thinking here of both ad hoc analysis and production analysis (eg regularly published statistics). Before we hit the ideas, it might help to give examples of these two types of analysis.

A good example of ad hoc analysis would be if, say, a global event meant that a lot more analysis was suddenly required on trade and trade links, typically in the form of answers to one-off questions. With such ad hoc analysis, you don’t know the specific question in advance, but you might know that, from time to time, questions about trade links will arise. So to improve the productivity of analytical operations in this case, you would want analysts or data scientists or economists, whoever it is, to have longitudinal data on bilateral trade flows to hand, with their permissions to access it cleared ahead of time, the data cleaned, the data stored in a sensible format, and the data accessible to suitable analytical tools. Essentially, it’s about putting analysts in the best position to answer questions in a timely way as they arise, maximising agility and minimising repeated effort for when the next question lands.

An example of production analysis would be a pipeline that ends in a publication and which involves data ingestion, data checking, processing, and publication of data alongside a report that has a fairly consistent structure. Perhaps this process involves a lot of manual steps, like handovers, emails, someone staring at a spreadsheet, and someone having to serve requests for custom cuts of the data. Improving productivity might look like automating the end-to-end pipeline, cutting out the manual steps, and, finally, serving the data up via an API so that people can take whatever cuts they want. Again, it’s about minimising effort.

Turning to operations now: in every public sector institution, there are thousands of actions that are repeated every day by most staff, something as basic as turning on a laptop or checking a calendar. If some of the tasks that happen repeatedly can be sped up even a little, then there’s a large saving to be made. This does require measuring how fast those tasks are today and, to get an idea of what the return on investment (ROI) might be, having a counterfactual in mind of how fast they could be with that investment.

With that background out of the way, let’s move on to some ideas!

Operations

More productive capital

I touched on computer-task time savings in the context of better hardware in this post on the false economy of bad IT. TL;DR: it always makes sense to buy staff earning over the median UK salary a high-spec laptop if they lose more than 9 minutes a day due to a low- to mid-range laptop being slow or glitchy. As an aside, the UK has a relatively low capital stock, as seen in Figure 1 (chart by Tera Allas).
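The break-even logic behind a rule like the 9-minute one can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the calculation from that post: lost minutes are valued at average employment cost per minute, the extra laptop cost is spread evenly over its life, and the figures in the example (a £1,000 price difference, a 3-year life, a £40,000 annual employment cost) are hypothetical.

```python
def break_even_minutes_per_day(
    extra_laptop_cost: float,
    laptop_lifetime_years: float,
    annual_employment_cost: float,
    working_days_per_year: int = 220,
    hours_per_day: float = 7.5,
) -> float:
    """Minutes lost per working day above which a pricier laptop pays for itself."""
    minutes_per_year = working_days_per_year * hours_per_day * 60
    # Value of one minute of staff time
    cost_per_minute = annual_employment_cost / minutes_per_year
    # Extra laptop spend, spread over every working day of its life
    extra_cost_per_day = extra_laptop_cost / (laptop_lifetime_years * working_days_per_year)
    return extra_cost_per_day / cost_per_minute


# Hypothetical figures, for illustration only
print(round(break_even_minutes_per_day(1_000, 3, 40_000), 2))  # → 3.75
```

Because working days appear in both the daily laptop cost and the cost per minute, they cancel out: what matters is the price difference relative to the employment cost over the laptop’s life, and the length of the working day.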

There’s a whole class of repeated tasks that people do on computers that are slower than they could be because of the computer’s hardware or configuration. How can the productivity loss be definitively tested? One option is a log of the times when someone’s workflow was interrupted because, say, the computer was turning on, or froze, or was opening an application. Another would be to measure the time it takes to do similar tasks in a perfect reference situation, eg the same task on a high-spec laptop, and use the time that took as the counterfactual. Similarly, one could measure productivity¹ with one software package relative to another to more accurately assess which is better (a lot of software is chosen for reasons that are orthogonal to productivity, as I sketch out in this other post).

1 I very purposefully say ‘productivity’ and not just ‘time’ here because the most expensive input to the production function is usually people’s time, not the time it takes software to run. This is why data scientists only reach for C++ and Rust, which are slower to write code in but run faster, when it’s absolutely necessary.
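To turn the reference-laptop comparison into a number, one option is to time the same task on both set-ups and scale the difference by how often the task is done. A minimal sketch: the scripted task, the 45-second and 15-second timings, and the 20-times-a-day frequency are all hypothetical, and for human-facing steps (like waiting for an application to open) the timings would come from a stopwatch or an interruptions log rather than from code.

```python
import statistics
import time
from typing import Callable


def median_runtime(task: Callable[[], object], repeats: int = 5) -> float:
    """Median wall-clock seconds to run `task`; the median dampens one-off hiccups."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        task()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def daily_minutes_lost(current_seconds: float, reference_seconds: float, times_per_day: int) -> float:
    """Minutes per day lost relative to the reference (counterfactual) set-up."""
    return max(current_seconds - reference_seconds, 0.0) * times_per_day / 60


# A scripted task can be timed directly on each machine...
seconds = median_runtime(lambda: sum(range(1_000_000)))
print(f"Scripted task: {seconds:.4f}s")

# ...and the gap scaled up: 45s here vs 15s on the reference laptop, 20 times a day
print(daily_minutes_lost(45, 15, 20))  # → 10.0
```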

Now you might say, “ah, but you haven’t done a fair test: that low- to mid-range institutional laptop has lots of important extra security on it that the high-end reference laptop doesn’t.” You’re right; ideally one would do a like-for-like comparison, changing only one feature at a time. Pragmatically, though, I would see it as a feature, not a bug: use it as a way to see the overall trade-off that’s being made between security + cost vs productivity.

Regardless of how you do it, once bottlenecks in productive workflows have been identified and assessed, investment resources can focus on improving them in the order of biggest benefit.

A partial alternative to going high-spec is to allow Bring Your Own Device (BYOD). This allows the institution’s data and software to be accessed via the personal devices of staff, which may be faster and may be more suited to staff (by selection). There are security issues around this, and it may not be an equitable solution: more junior staff may not be able to afford the same tech as those at the top of the organisation. Still, if it doesn’t make sense to stump up for a high-spec laptop for everyone, this can be a great second option. One particularly secure combination that will be relevant to many public sector institutions is Apple hardware + iOS as an operating system + Microsoft² software, which allows people to use corporate account Outlook, Teams, SharePoint, etc, on iPhone and iPad (including with a keyboard, as long as it’s an Apple one). My iPad has a processor that’s 40% faster than my work laptop’s, so BYOD is a game-changer. Of course, you can’t do everything from an iPad³, but you can certainly do a lot.

2 Throughout, I’ll be referring to whether something or other plays well with Microsoft software. This is not because I think the public sector should be using Microsoft for everything (far from it) but because, as I like to say, ‘nobody ever got fired for buying Microsoft’, and the reality is that it’s what most public sector institutions use. Key exceptions I’m aware of are the Munich government and the French Gendarmerie, who both use custom versions of Linux as part of their own efficiency drives, high-security areas of many governments, some national laboratories, and of course computationally intensive systems.

3 For all practical purposes, you can’t code directly on an iPad in the way you would a laptop or desktop. Yet.

Free and open source software

Free and open source software (FOSS) definitely isn’t always more efficient, but my rule of thumb is that if there is a widely used and close free and open source substitute, it will be far more efficient to use that rather than proprietary software.

Let’s look at a really clear example: Matlab vs Python. The latter is free and open source, does almost everything the former does, does vastly more other things to boot, and can be used in a wider range of places. Now there are some tasks for which Python is not a close substitute, and they really do need Matlab. But for most people, leveraging the massive Python ecosystem is more efficient.

There are many examples where paying for proprietary software makes sense because there is no close FOSS substitute. I am a fan of Obsidian, the markdown-based note-taking app. One of the reasons I think it’s great is that you never lose control of your data or the format it’s in: all of your notes are just markdown files and the Obsidian app just gives a fancy way to edit and view that content. The Obsidian app happens to be free but it’s not open source. I would similarly say there’s no FOSS equivalent of the incredible integration of hardware and software that Apple has achieved with the MacBook. But, equally, there are plenty of areas where direct FOSS substitutes do exist.

In my view, the high efficiency of widely-used FOSS comes through a bunch of channels:

- The ‘F’ stands for free! Productivity is output per unit of input, so free is pretty good.⁴

- Widely-used open source software is less likely to have critical security flaws.⁵

- Again because it’s open source, you actually know what it’s doing!

- Very much related to the above, and somewhat counter-intuitively, it’s more private. You can see for yourself if there’s a backdoor. A proprietary software vendor knows, at the very least, that you bought the software: a maintainer of a FOSS package may not even know you exist.

- By dint of being free and widely used, there are many ideas for how to improve it, and guides on how to use it.

- You don’t get locked in to a proprietary software provider who can subsequently ratchet up the price or drop support for a critical feature.

- Missing a feature you’d like for your org in a specific library? If it’s reasonable and within scope, you can probably get it added by the maintainers. Or your org can simply add it for themselves. Bloomberg is a good example of a company that has gone heavily down this route.

- There’s also a diversity, equality, and inclusion benefit. Lots of students, especially those from poorer backgrounds, can’t afford expensive proprietary software but can use FOSS instead (as a student, I used a version of LibreOffice). Those with more resources may have been able to use proprietary software before they start work, which gives them an edge when they land their first job; FOSS helps level the field. Similarly, if you’re trying to be a leading public sector institution and inspire other institutions around the world, you’ll go further if you use FOSS because it’s accessible in every country.

4 I can hear you saying that some FOSS is harder to use. Linux being harder to use than Windows is a good example, although that one is far less true today given the rise of user-friendly Linux distributions like Ubuntu. But if a piece of software is so much harder to use, I would argue it isn’t a close substitute!

5 If it did have security issues, they probably—but not certainly—would have already been exploited and fixed because it’s open. Call it the weak open source security efficiency hypothesis.

Operational efficiencies

AKA process efficiency. Computer tasks aren’t the only area where one could find operational efficiencies. There’s a whole set of efficiency gains that most organisations could find in their standard processes too. One example is around the permissions required to access data or install certain software, etc. In most public sector organisations I’ve observed, these have to be separately approved for each individual within a team. But another model would be to use team-level permissions: if someone joins Team A, it is assumed they will need access to the set of data used by Team A. This saves managees a lot of tedious time requesting permissions, and managers a lot of tedious time approving them. In another post in this series, I wrote about how presuming access and openness within the organisation could also provide a boost; in that case, no permissions are needed!
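The team-level model can be sketched as a tiny data structure in which access is granted to teams and people inherit it simply by joining. This is a toy illustration, not any particular access-management product; the team, user, and dataset names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TeamAccess:
    """Toy team-level permissions: datasets are granted to teams, not individuals."""

    team_datasets: dict[str, set[str]] = field(default_factory=dict)
    user_teams: dict[str, set[str]] = field(default_factory=dict)

    def grant_to_team(self, team: str, dataset: str) -> None:
        # One approval covers everyone in the team, now and in future
        self.team_datasets.setdefault(team, set()).add(dataset)

    def join_team(self, user: str, team: str) -> None:
        # Joining the team is the only step a new starter needs
        self.user_teams.setdefault(user, set()).add(team)

    def can_access(self, user: str, dataset: str) -> bool:
        return any(
            dataset in self.team_datasets.get(team, set())
            for team in self.user_teams.get(user, set())
        )


access = TeamAccess()
access.grant_to_team("Team A", "bilateral_trade_flows")
access.join_team("new_starter", "Team A")
print(access.can_access("new_starter", "bilateral_trade_flows"))  # → True
print(access.can_access("someone_else", "bilateral_trade_flows"))  # → False
```

The design point is that the number of approvals scales with team-dataset pairs rather than person-dataset pairs, so a new joiner triggers no approvals at all.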

That’s just one example. But by analysing how staff have to spend their time to get things done, one could find many more—and again try to minimise them.

Data for analysis

Most public sector organisations, probably most organisations in general, have a legacy systems problem that makes analysing data (particularly big data) painful. There are also legitimate reasons why security is often tight around the analytical tools that most public sector orgs can use. Between these two, it can take analysts orders of magnitude longer to analyse data using an institution’s systems than it would to analyse a dataset of the same size on their own laptop, cloud account, or an open system. Analysis would go faster and be able to do more if these datasets were available outside the security fence.

Additionally, there is strong evidence that releasing datasets into the wild has huge benefits (eg Landsat data being released for free by the US government was estimated to have generated 25 billion USD in 2023). You can think of the economic narrative like this: data are an input into lots of potential public goods. People wouldn’t pay for the data directly to make those public goods, as they don’t capture enough of the benefit themselves—but make the data free, and their private capture is enough for them to put the work in and generate public goods worth way more than the total revenue obtained by directly charging for the upstream data.

The form of those public goods may be especially beneficial for public sector institutions. You can think of situations where the public sector doesn’t have enough resources to perform all of the analysis it would ideally have. But, with the data out there, others might do the analysis—co-opting others to help with the overall analytical mission! Those other analysts might just be in another government department. (More on this idea of sharing as an efficiency in the previous post in this series.)

Releasing real data

The first and simplest response to these pressures and productivity-sapping systems is to publish the data publicly, at least for data that aren’t proprietary or sensitive. And perhaps we can all be a bit more radical about what is and is not sensitive. An obvious example is firm-level data: UK legislation currently prohibits the Office for National Statistics from publishing information on individual firms, even though Companies House, another part of the UK state, does publish information on individual firms’ balance sheets.

Having important data be available publicly means staff within an institution can use non-restricted tools to work with it, which would be a massive boost to productivity. It means that any public goods that are possible with it are much more likely to be created, unlocking its true value, and, potentially, that more relevant analysis would be happening with it than otherwise would be the case. And, especially if the data are released via an API⁶, it means no more Freedom of Information (FOI) requests or emails from other institutions: people can self-serve the cuts of the data that they need. The substantial time that public sector analysts spend serving FOI and inter-departmental requests for data would simply no longer be needed for any data that are published.

6 It’s not that hard to build an API using modern cloud tools, by the way. Check out this post for an example.
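At its core, a self-serve API maps a request such as GET /trade-flows?partner=FR&year=2023 to a filtered cut of a published table. The sketch below shows only that filtering step, using the standard library; the endpoint, column names, and rows are hypothetical, and in practice a web framework or cloud service would handle routing, authentication, and pagination.

```python
from urllib.parse import parse_qs

# Hypothetical published table, one dict per row
TRADE_FLOWS = [
    {"partner": "FR", "year": 2023, "value_gbp_m": 120.5},
    {"partner": "FR", "year": 2022, "value_gbp_m": 110.0},
    {"partner": "DE", "year": 2023, "value_gbp_m": 98.25},
]


def serve_cut(rows: list[dict], query_string: str) -> list[dict]:
    """Return the rows matching every key=value pair in the query string."""
    # parse_qs returns a list of values per key; keep the first one
    filters = {key: values[0] for key, values in parse_qs(query_string).items()}
    # Query values arrive as strings, so compare with each cell's string form
    return [
        row for row in rows
        if all(str(row.get(key)) == value for key, value in filters.items())
    ]


print(serve_cut(TRADE_FLOWS, "partner=FR&year=2023"))
# → [{'partner': 'FR', 'year': 2023, 'value_gbp_m': 120.5}]
```

An empty query returns the whole table, so the same function serves both "give me everything" and any custom cut.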

Synthetic data

However, most datasets that are used within public sector institutions are proprietary or sensitive or both. They simply can’t be shared externally. But what if there was a way to share the data without sharing the data? Well, there is—it’s called synthetic data.

Synthetic data are artificially generated data that are made to resemble real-world data in some of their statistical properties but in a non-disclosive way. They have been used by the US Census Bureau. For some generation methods, notably those based on differential privacy, there are mathematical guarantees about how hard it is to reverse the process and get back to the original data (a bit like encryption). And you can set how strong this protection is, though there’s a trade-off with preserving aggregate statistical properties.

One way synthetic data could be beneficial is if it was released and people could still get accurate statistical aggregations from it. But I imagine that most institutions wouldn’t be super comfortable with this as some statistics will be accurate but others won’t be. You can imagine instead creating synthetic data with extremely strong protection and releasing that, and allowing people to develop with it. Once an analytical script developed on the synthetic data is ready, staff and even externals could submit it to be run on the sensitive data (on what is probably a more expensive computational setup.) The benefit of synthetic data is that the bottleneck around the sensitivities of the data and access to it via specialist secure platforms are removed for most of the development process (except the last stage.) From a science point of view, there are even some attractions to working blind to the actual results in this way—it’s a bit like pre-registering the analysis for a medical trial.
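To make the develop-on-synthetic, run-on-real workflow concrete, here is a deliberately crude stand-in for a synthesiser: shuffling each column independently. It keeps each column’s distribution but breaks the links between columns. It is not what packages like the Synthetic Data Vault do, and it offers no formal privacy guarantee (an unusual value still appears verbatim), so treat it purely as an illustration. The records and the analysis function are hypothetical.

```python
import random


def shuffle_columns(rows: list[dict], seed: int = 0) -> list[dict]:
    """Toy 'synthetic' data: shuffle each column independently of the others."""
    rng = random.Random(seed)
    columns = {key: [row[key] for row in rows] for key in rows[0]}
    for values in columns.values():
        rng.shuffle(values)
    # Reassemble rows from the independently shuffled columns
    return [dict(zip(columns, values)) for values in zip(*columns.values())]


def mean_income_by_region(rows: list[dict]) -> dict[str, float]:
    """The analysis script: written once, run on synthetic data first, real data last."""
    incomes: dict[str, list[float]] = {}
    for row in rows:
        incomes.setdefault(row["region"], []).append(row["income"])
    return {region: sum(values) / len(values) for region, values in incomes.items()}


# Hypothetical sensitive records, which would stay behind the security fence
real = [
    {"region": "North", "income": 21_000},
    {"region": "North", "income": 34_000},
    {"region": "South", "income": 29_000},
    {"region": "South", "income": 52_000},
]

synthetic = shuffle_columns(real)
print(mean_income_by_region(synthetic))  # developed and debugged against this
print(mean_income_by_region(real))       # submitted, unchanged, to run on the real data
```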

Of course, there is no free lunch—there is some work to do for the institutional data owner in generating and releasing the synthetic data, and to do it at scale probably needs cloud computing. And it’s not the easiest concept to explain to people who are less in the data science space either, so senior colleagues may struggle to understand the risks and benefits.

There are well-developed packages to work with synthetic data, for example to:

- generate synthetic data, eg Synthetic Data Vault and Synthetic Data SDK

- evaluate how close synthetically generated data are to the real underlying data, eg SDMetrics

- benchmark how quickly synthetic data are generated according to different methods
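As a flavour of what evaluation tools measure, one common check is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest gap between the cumulative distributions of a real and a synthetic column, where 0 means the empirical distributions are identical and 1 means the samples don’t overlap at all. The hand-rolled version below is a sketch, not SDMetrics’ implementation, though a score of 1 minus the KS statistic is similar in spirit to what such tools report. The sample values are hypothetical.

```python
from bisect import bisect_right


def ks_statistic(real: list[float], synthetic: list[float]) -> float:
    """Two-sample KS statistic: the maximum gap between the empirical CDFs."""
    real, synthetic = sorted(real), sorted(synthetic)

    def ecdf(sample: list[float], x: float) -> float:
        # Share of the (sorted) sample that is <= x
        return bisect_right(sample, x) / len(sample)

    # The maximum gap always occurs at one of the observed values
    points = set(real) | set(synthetic)
    return max(abs(ecdf(real, x) - ecdf(synthetic, x)) for x in points)


real_incomes = [21_000, 29_000, 34_000, 52_000]
synthetic_incomes = [22_000, 29_000, 33_000, 61_000]
print(1 - ks_statistic(real_incomes, synthetic_incomes))  # → 0.75
```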

I was quite involved in synthetic data at the Office for National Statistics, running a team that tried to use it in practice. They did excellent work. If you’re really interested in using it in anger, you should reach out to ONS’ Data Science Campus. You can find a number of posts on the topic that are by my former colleagues here. They also have a recent data linkage package that uses synthetic data so that two public sector institutions can link data without seeing sensitive columns; you can find that here.

Fake data

Even if the risk appetite of your institution doesn’t extend to synthetic data, perhaps it would extend to entirely fake data? If you like, this is an extreme form of synthetic data where the encryption cannot be reversed (as opposed to ‘cannot practically be reversed’ with synthetic data). Fake data have only the same data types (eg string) and forms (eg an email address) as the original data but are otherwise completely and utterly made up. This means that fake data have all the benefits of synthetic data bar one: statistical aggregates computed from them won’t approximate those derived from the real data.
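Before reaching for a package, it can help to see the underlying idea: a schema that maps each column to a generator producing values of the right type and form. The sketch below uses only Python’s standard library; the column names and formats are hypothetical, and dedicated packages provide far richer, locale-aware generators.

```python
import random
import string
from typing import Any, Callable

ValueMaker = Callable[[random.Random], Any]


def fake_email(rng: random.Random) -> str:
    # Right form (user@domain), made-up content; example.com is reserved for examples
    user = "".join(rng.choices(string.ascii_lowercase, k=8))
    return f"{user}@example.com"


# Hypothetical schema: column name -> generator of the right type and form
SCHEMA: dict[str, ValueMaker] = {
    "customer_id": lambda rng: rng.randint(100_000, 999_999),
    "email": fake_email,
    "region": lambda rng: rng.choice(["North", "South", "East", "West"]),
    "balance": lambda rng: round(rng.uniform(0, 10_000), 2),
}


def fake_rows(schema: dict[str, ValueMaker], n: int, seed: int = 0) -> list[dict[str, Any]]:
    """Generate n rows of fake data; a fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    return [{column: make(rng) for column, make in schema.items()} for _ in range(n)]


for row in fake_rows(SCHEMA, 3):
    print(row)
```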

There are a number of extensible packages for generating fake data, with which you can create schemas that produce an entire set of fake data in the style you want. For example, Faker:

```python
from faker import Faker

fake = Faker()
# Values are random, so your outputs will differ from those shown

fake.name()
# 'Lucy Cechtelar'

fake.address()
# '426 Jordy Lodge
#  Cartwrightshire, SC 88120-6700'

fake.text()
# 'Sint velit eveniet. Rerum atque repellat voluptatem quia rerum. Numquam excepturi
#  beatae sint laudantium consequatur. Magni occaecati itaque sint et sit tempore. Nesciunt
#  amet quidem. Iusto deleniti cum autem ad quia aperiam.
#  A consectetur quos aliquam. In iste aliquid et aut similique suscipit. Consequatur qui
#  quaerat iste minus hic expedita. Consequuntur error magni et laboriosam. Aut aspernatur
#  voluptatem sit aliquam. Dolores voluptatum est.
#  Aut molestias et maxime. Fugit autem facilis quos vero. Eius quibusdam possimus est.
#  Ea quaerat et quisquam. Deleniti sunt quam. Adipisci consequatur id in occaecati.
#  Et sint et. Ut ducimus quod nemo ab voluptatum.'
```

And Mimesis:

```python
from mimesis import Person
from mimesis.locales import Locale
from mimesis.enums import Gender

person = Person(Locale.EN)

person.full_name(gender=Gender.FEMALE)
# Output: 'Antonetta Garrison'

person.full_name(gender=Gender.MALE)
# Output: 'Jordon Hall'
```

Reproducible analytical pipelines

A reproducible analytical pipeline (RAP) is a series of steps that trigger one after the other to produce an analysis from data ingestion all the way to the end product. It’s a form of automation. To illustrate why there are big efficiency gains to be had with them, let’s first look at an end-to-end process that doesn’t use RAP.

Getting a bad RAP

Let’s take a high-stakes analytical pipeline that has lots of potential for improvement and efficiencies, yet which is extremely common in the wider public sector. Imagine a process for publishing some key economic statistics that looks like this:

1. Statistical office publishes new data in an Excel file

2. An analyst downloads the file onto a network drive

3. Analyst checks the file by eye and copies specific cells into another Excel workbook that has the analysis

4. Analyst drags out the equations behind the cells to incorporate the new data

5. Values from the calculations get manually typed into a third spreadsheet, which also has a tab with sensitive data in

6. The results then get manually copied into a Word document for a report

7. The final calculations tab from the third spreadsheet is published externally, alongside the report

Apart from being heavily manual, this process has multiple failure points:

- Perhaps there’s an earlier, unrevised version of the same file already on the network drive, and that is used by mistake in step 3.

- Excel silently changes data: it reformats dates, turns some names into dates, and alters data types (eg stripping leading zeros from codes)

- Excel has a hard row limit (1,048,576 rows per worksheet in current versions), so rows beyond it are missed

- Manual copy-paste errors

- Equations differ between cells, and the analyst doesn’t notice because the equations are hidden

- Calculations break if someone adds or removes rows

- There’s no easy way to see whether the calculations have changed since the last time the spreadsheet was run

- Time-consuming manual checks

- Any mistake in step 1 means redoing all steps manually again

- High risk of the sensitive data being published alongside the other data

Perhaps you think this is all a bit implausible. Sadly, it isn’t! Here are some real-world examples of where Excel-based pipelines have gone wrong:

- On Thursday, 3rd August 2023, an FOI request was made to the Police Service of Northern Ireland (PSNI). It asked for the “number of officers at each rank and number of staff at each grade”. The spreadsheet PSNI sent back contained not just those numbers but every employee’s initials, surname, and unit, even for those working out of MI5’s Northern Irish headquarters. Sensitive information on 10,000 people was released by mistake because of an extra tab in the Excel file.

- There’s the infamous case of the UK’s Covid Test and Trace programme. Civil servants were collating cases in an Excel spreadsheet in the older .xls format, which is limited to 65,536 rows, and thus missed a bunch of infections, not to mention crucial links in the spread of the disease. Excel simply ran out of rows. Work by economists Thiemo Fetzer and Thomas Graeber has shown that this probably led to an extra 125,000 Covid infections.

- Hans Peter Doskozil was mistakenly announced as the new leader of Austria’s Social Democrats (SPÖ) because of a “technical error in the Excel list”.

- One government department published a spreadsheet that still had a note in it that said “Reason for arrest data is dodgy so maybe we shouldn’t publish it.”

- Mathematical models written in Excel are especially vulnerable to mistakes. In a highly influential paper, economists Carmen Reinhart and Ken Rogoff found that economic growth slows dramatically when a country’s debt rises above 90% of GDP. But the formula in one of their spreadsheet columns missed out some of the countries in their data. A student found the error, and realised that correcting it made the results less dramatic.

- The Norwegian Sovereign Wealth Fund’s 2023 Excel snafu saw it miscalculate an index due to a “decimal error”. The mistake cost 92 million dollars.

- Back in 2012, J.P. Morgan went even further and racked up $6 billion of losses in part due to a “value-at-risk” model being manually copied and pasted across spreadsheet cells.

There are many more examples: you can find a list at the delightfully named European Spreadsheet Risks Interest Group. Now, good reproducible analytical pipeline (RAP) practices cannot completely protect you from mistakes, but they can substantially lower the risk of errors while also saving time via automation.
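As a small taste of how code can guard against the sensitive-data failure mode (as in the PSNI case), a publication step can refuse to write anything outside an explicit allow-list of columns. A minimal sketch using the standard library; the column names and values are hypothetical.

```python
import csv
import io

# Hypothetical allow-list, agreed in advance for this publication
PUBLISHABLE_COLUMNS = ["rank", "officer_count"]


def to_publishable_csv(rows: list[dict]) -> str:
    """Serialise rows to CSV, failing loudly if any non-approved column is present."""
    present = set().union(*(row.keys() for row in rows))
    unapproved = present - set(PUBLISHABLE_COLUMNS)
    if unapproved:
        raise ValueError(f"Refusing to publish unapproved columns: {sorted(unapproved)}")
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=PUBLISHABLE_COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_publishable_csv([{"rank": "Constable", "officer_count": 100}]))

try:
    to_publishable_csv([{"rank": "Constable", "officer_count": 100, "surname": "Smith"}])
except ValueError as error:
    print(error)  # → Refusing to publish unapproved columns: ['surname']
```

An allow-list fails safe: a new sensitive column is blocked by default, whereas a block-list only catches the columns someone thought to name.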

Good RAP

What does a good RAP look like? There are a number of technologies and practices that can help create a RAP that avoids mistakes and saves tons of time and labour to boot. Some of these RAP technologies and practices are listed below in, roughly, the order in which they would be used in a data pipeline.

- Use an API to programmatically access the latest version of the data

- Keep a copy of the raw, downloaded version of the data so that you can always come back to it. Use datetime versioning to keep track of download time.

- Use data validation checks to ensure that the data looks how you expect it to on receipt

- Use code to process the data, rather than Excel spreadsheets. And use code⁷ that:

  - is under version control, so that it is completely auditable and all changes are logged

  - is well-formatted and linted, perhaps by a tool such as Ruff

  - has pre-commit enabled, with hooks that catch data, secrets, and other sensitive information committed by mistake, and that automatically check code quality

  - has tests so that everyone feels assured the code is doing the right thing

  - is subject to pull requests whenever it changes, so that multiple people sign off on important code

  - has its tests and checks run automatically by runners, eg GitHub Actions

  - is reproducible, including the set of packages and version of the language (eg via a pyproject.toml file and use of a tool like uv), and ideally with a reproducible operating system too (eg via Docker)

- Use a data orchestration tool to execute the entire code processing pipeline from start to finish. Some popular orchestration tools are Apache Airflow (first generation) and Dagster and Prefect (both second generation). See below for an example of Prefect in action. Orchestration flows can be triggered on events, for example if a new file lands in a folder, or on a schedule. (An easier way into executing code on events, for small local problems, is via packages such as watchdog, which watches for file changes and takes actions.)

- If there is a big overhead to using big data systems, use DuckDB for processing fairly large datasets locally (say, up to 1TB). Don’t like writing SQL queries? Don’t worry, no one does, but there are packages that can help.

- Store the cleaned data or results in a database.

- Use anomaly detection techniques, etc, on the created statistics to check that they are plausible. (With a human in the loop!)

- Build an API to disseminate the created statistics

- Create the report using a tool which can reproduce the same charts via code each time, eg Quarto, which can execute code chunks and be used to produce Word documents, HTML pages, PDFs, and even websites. (I wrote a short guide here.) Oh yeah, it does PowerPoint and Beamer presentations too.

7 Many of these code features are present in the cookiecutter Python package; there’s a blog post on that here.
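The ingestion items at the top of that list (keeping a datetime-versioned raw copy and validating data on receipt) can be sketched with Python’s standard library alone. This is a minimal sketch: the file name, expected columns, and checks are hypothetical, and in practice a dedicated validation library might replace the hand-written checks.

```python
import csv
import io
from datetime import datetime, timezone
from pathlib import Path

EXPECTED_COLUMNS = ["date", "series_id", "value"]  # hypothetical schema


def raw_filename(name: str, downloaded_at: datetime) -> str:
    """Prefix the original file name with a sortable UTC download timestamp."""
    return f"{downloaded_at:%Y%m%dT%H%M%SZ}_{name}"


def save_raw_copy(content: bytes, raw_dir: Path, name: str) -> Path:
    """Store the download byte-for-byte; mode 'xb' refuses to overwrite an existing file."""
    raw_dir.mkdir(parents=True, exist_ok=True)
    path = raw_dir / raw_filename(name, datetime.now(timezone.utc))
    with path.open("xb") as handle:
        handle.write(content)
    return path


def validate(csv_text: str) -> list[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != EXPECTED_COLUMNS:
        return [f"Unexpected columns: {reader.fieldnames}"]
    problems = []
    for line_number, row in enumerate(reader, start=2):  # line 1 is the header
        try:
            datetime.strptime(row["date"], "%Y-%m-%d")
        except (TypeError, ValueError):
            problems.append(f"Line {line_number}: bad date {row['date']!r}")
        try:
            float(row["value"])
        except (TypeError, ValueError):
            problems.append(f"Line {line_number}: non-numeric value {row['value']!r}")
    return problems


sample = "date,series_id,value\n2024-01-31,GDP,101.2\n2024-02-30,GDP,n/a\n"
print(validate(sample))
# → ["Line 3: bad date '2024-02-30'", "Line 3: non-numeric value 'n/a'"]
```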

Just as an aside, here’s how to orchestrate a simple data pipeline that runs every hour with Prefect:

```python
# Contents of flow.py
from typing import Any

import httpx
from prefect import flow, task  # Prefect flow and task decorators


@task(retries=3)
def fetch_stats(github_repo: str) -> dict[str, Any]:
    """Task 1: Fetch the statistics for a GitHub repo"""
    api_response = httpx.get(f"https://api.github.com/repos/{github_repo}")
    api_response.raise_for_status()  # Force a retry if not a 2xx status code
    return api_response.json()


@task
def get_stars(repo_stats: dict[str, Any]) -> int:
    """Task 2: Get the number of stars from GitHub repo statistics"""
    return repo_stats["stargazers_count"]


@flow(log_prints=True)
def show_stars(github_repos: list[str]):
    """Flow: Show the number of stars that GitHub repos have"""
    for repo in github_repos:
        # Call Task 1
        repo_stats = fetch_stats(repo)

        # Call Task 2
        stars = get_stars(repo_stats)

        # Print the result
        print(f"{repo}: {stars} stars")


# Run the flow
if __name__ == "__main__":
    show_stars([
        "PrefectHQ/prefect",
        "pydantic/pydantic",
        "huggingface/transformers",
    ])
```

This flow can be executed on a schedule by running the code below (assuming that you have already set up a work pool; see the Prefect documentation for more):

```python
from prefect import flow

# Source for the code to deploy (here, a GitHub repo)
SOURCE_REPO = "https://github.com/prefecthq/demos.git"

if __name__ == "__main__":
    flow.from_source(
        source=SOURCE_REPO,
        entrypoint="my_workflow.py:show_stars",  # Specific flow to run
    ).deploy(
        name="my-first-deployment",
        parameters={
            "github_repos": [
                "PrefectHQ/prefect",
                "pydantic/pydantic",
                "huggingface/transformers",
            ]
        },
        work_pool_name="my-work-pool",
        cron="0 * * * *",  # Run every hour
    )
```

I share this to show that automating code doesn’t have to be super complicated: with the cloud, it’s achievable in a few lines!

There’s a lot in the list above. But doing all of it not only massively reduces the risk of mistakes, it also makes it far easier to find mistakes when they do occur. And the automation saves masses of time, allowing analysts to focus where they can add the most value.
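For the anomaly-detection step in the list, a simple and robust starting point is the modified z-score, which measures how far a new figure sits from the median of past figures in units of the median absolute deviation (MAD). The sketch below flags a figure for a human to review rather than blocking publication; the history values are hypothetical, and the conventional 3.5 threshold is an assumption to tune for each series.

```python
import statistics


def needs_review(history: list[float], new_value: float, threshold: float = 3.5) -> bool:
    """Flag new_value if its modified z-score against history exceeds threshold."""
    median = statistics.median(history)
    mad = statistics.median(abs(x - median) for x in history)
    if mad == 0:
        # At least half the history equals the median: flag any departure from it
        return new_value != median
    modified_z = 0.6745 * (new_value - median) / mad
    return abs(modified_z) > threshold


monthly_index = [100.2, 100.9, 99.8, 101.5, 100.4, 101.1]  # hypothetical past releases
print(needs_review(monthly_index, 101.0))  # → False
print(needs_review(monthly_index, 110.0))  # → True
```

Using the median and MAD rather than the mean and standard deviation means one odd past release doesn’t distort the baseline the next release is judged against.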

You can find more on reproducibility and RAP here and here, and here in the context of research.

Finally, I realise that finding people with the skills to do RAP to this standard is difficult and potentially expensive in itself. The market clearly recognises the benefits too, which is why data scientists are in such high demand! For me, overall, this is a much better way of doing things: even if the input costs are potentially higher, the output quality is likely to be significantly higher, so I contend that you still win out on productivity overall.

Technical communication and code documentation

This section is significantly more technical than the others and some readers may want to skip it and go straight to Model Behaviour.

I already covered the efficiencies that could be unlocked by obsessively using documentation in a previous post on efficiency. Many of the same benefits apply from documenting code obsessively too. As a reminder, those benefits include faster onboarding, having a single source of truth, making it easy to avoid stale documentation, more auditability, less likelihood of (in this case) code being used incorrectly, and, if the documentation is shared, transparency.

However, there are some extra actions you can take with the documentation of code to squeeze every last drop of efficiency out of these benefits.

At a minimum, each code repository should have a markdown README file that sets out what it is about and how to use it.

The code itself should have sensible comments, and use informative variable, function, method, and class names. Functions, classes, and methods should have docstrings (comment blocks that say what the object does). I tend to use the Google-style docstring conventions, defined in Google’s Python style guide, but there are other docstring conventions around. Here’s an example of a Google-style docstring on a function:

```python
import pandas as pd


def _create_unicode_hist(series: pd.Series) -> pd.Series:
    """Return a histogram rendered in block unicode.

    Given a pandas series of numerical values, returns a series with one
    entry, the original series name, and a histogram made up of unicode
    characters. However, note that the histogram is very approximate, partly
    due to limitations in how unicode is displayed across systems.

    Args:
        series (pd.Series): Numeric column of data frame for analysis

    Returns:
        pd.Series: Index of series name and entry with unicode histogram as
        a string, eg '▃▅█'
    """
    # Function body omitted here: this example is about the docstring
```

It’s not just helpful for people looking at the innards of the code; it also helps people who use functions and methods from the code in other code. If someone were to call help(_create_unicode_hist), they would get the docstring back. In sophisticated development environments like Visual Studio Code, they would get a pop-up of this docstring just by hovering their mouse over the function.

For critical code, you may also want to add type annotations, aka type hints. The code example above has those: in this case, series has type pd.Series and the function returns pd.Series too (this is what -> pd.Series does.) Type hints are just that: hints to help people understand what types of object are supposed to go into and come out of modular parts of code like functions and methods. Because they are hints, they belong in this section on documenting code; adding type hints helps others understand what’s supposed to happen.
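Because docstrings and type hints are stored on the function object itself, they can be read back at run time, which is what help() and editors rely on. The minimal sketch below uses a hypothetical add_vat function to show this, and also to show that Python does not enforce the hints: a call with the "wrong" types still runs.

```python
import inspect
from typing import get_type_hints


def add_vat(price: float, rate: float = 0.2) -> float:
    """Return a price with value added tax applied (hypothetical example)."""
    return price * (1 + rate)


# The docstring and the annotations live on the function object...
print(inspect.getdoc(add_vat))
hints = get_type_hints(add_vat)
print(hints)

# ...but the interpreter does not enforce the hints. Here a list is
# "multiplied" by 2 ([1] * 2 == [1, 1]) instead of an error being raised.
print(add_vat([1], rate=1))
```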

If you’re really concerned about making sure the code is not used incorrectly, you can even enforce type hints, either at run time, so that an error is thrown if a user runs the code with the wrong data types, or at development time, so that the developer gets a warning if the internals of the code don’t adhere to the given type hints. typeguard is a popular package for run-time type checking, while for development-time (aka static) type checking, Mypy and Microsoft’s Pyright are the best-known tools.
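To give a flavour of what run-time checking does, here is a deliberately simplified, standard-library-only decorator. It is a toy, not typeguard’s implementation: the name enforce_simple_types is hypothetical, it only checks hints that are ordinary classes such as int or str (generics like list[int] or Union are skipped), and it doesn’t handle *args or **kwargs properly. In practice you’d reach for typeguard instead.

```python
import functools
import inspect
from typing import get_type_hints


def _is_plain_class(hint) -> bool:
    # True for ordinary classes like int or str; False for generics such as
    # list[int] or Union[...], which this toy checker doesn't understand.
    return type(hint) is type


def enforce_simple_types(func):
    """Toy run-time checker: raise TypeError when plain-class hints are violated."""
    hints = get_type_hints(func)
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        # Match the call's arguments to parameter names, filling in defaults.
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()
        for name, value in bound.arguments.items():
            expected = hints.get(name)
            if _is_plain_class(expected) and not isinstance(value, expected):
                raise TypeError(
                    f"{name} must be {expected.__name__}, got {type(value).__name__}"
                )
        result = func(*args, **kwargs)
        expected_return = hints.get("return")
        if _is_plain_class(expected_return) and not isinstance(result, expected_return):
            raise TypeError(f"return value must be {expected_return.__name__}")
        return result

    return wrapper


@enforce_simple_types
def count_words(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


print(count_words("reproducible analytical pipelines"))  # 3

try:
    count_words(42)
except TypeError as err:
    print(f"Rejected: {err}")  # Rejected: text must be str, got int
```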

But you can go further! You’ll notice that the type annotations are, in effect, repeated in both the docstring and in the function signature. This means they could say different things, which would be confusing to users. Have no fear, though, because there’s a package for that (there always is): Pydoclint trawls through your docstrings and type annotations and tells you if they diverge.
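Pydoclint does this thoroughly; it looks at much more than argument names. As a far cruder illustration of the idea, the hypothetical helper below only compares the argument names listed under a Google-style Args: section with the function’s actual parameters, and assumes each entry looks like "name (type): description".

```python
import inspect
import re


def undocumented_or_stale_args(func):
    """Return (missing_from_docstring, not_in_signature) as two sets of names.

    A toy sketch: it only reads argument names from an indented block under
    an 'Args:' heading in a Google-style docstring.
    """
    doc = inspect.getdoc(func) or ""
    documented = set()
    in_args = False
    for line in doc.splitlines():
        if line.strip() == "Args:":
            in_args = True
            continue
        if in_args:
            if line and not line.startswith(" "):
                break  # a new unindented section (e.g. 'Returns:') ends 'Args:'
            match = re.match(r"\s+(\w+)\s*(\(.*\))?:", line)
            if match:
                documented.add(match.group(1))
    actual = set(inspect.signature(func).parameters)
    return actual - documented, documented - actual


def apply_discount(price: float, discount: float) -> float:
    """Apply a discount to a price.

    Args:
        price (float): Original price.
        rate (float): Discount as a fraction.

    Returns:
        float: Discounted price.
    """
    return price * (1 - discount)


# The docstring still says 'rate' but the parameter was renamed 'discount'.
print(undocumented_or_stale_args(apply_discount))  # ({'discount'}, {'rate'})
```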

But you can go even further! Let’s say you want to help people understand, practically, how to use a function, method, or class. Well, you can also add example code to docstrings. Here’s the format:

```python
from typing import Union

import pandas as pd
import polars as pl


def skim(
    df_in: Union[pd.DataFrame, pl.DataFrame],
) -> None:
    """Skim a pandas or polars dataframe and return visual summary statistics on it.

    skim is an alternative to pandas.DataFrame.describe(), quickly providing
    an overview of a data frame via a table displayed in the console. It
    produces a different set of summary functions based on the types of
    columns in the dataframe. You may get better results from ensuring that
    you set the datatypes you want in your dataframe before running skim.

    Note that any unknown column types, or mixed column types, will not be
    processed.

    Args:
        df_in (Union[pd.DataFrame, pl.DataFrame]): Dataframe to skim.

    Raises:
        NotImplementedError: If the dataframe has a MultiIndex column structure.

    Examples
    --------
    Skim a dataframe

    >>> df = pd.DataFrame(
    ...     {
    ...         'col1': ['Philip', 'Turanga', 'bob'],
    ...         'col2': [50, 100, 70],
    ...         'col3': [False, True, True]
    ...     })
    >>> df["col1"] = df["col1"].astype("string")
    >>> skim(df)
    """
    ...  # implementation omitted from this excerpt
```

You can add as many examples as you like.

Now this is helpful in itself because it guides users as to how to use a bit of code. But, again, what if the examples were written 2 years ago and the code has changed? There’s a package for that! Using a package called xdoctest you can, you guessed it, test that any examples in your docstrings actually run and do what the docstring says they will do.
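xdoctest is a more flexible tool for this job, but Python’s standard library ships a similar one, doctest, which is enough to show the idea. In this minimal sketch (the share_of_total function is hypothetical), the example in the docstring is executed and its result compared with what the docstring claims; if the code drifted and the output no longer matched, the run would report a failure.

```python
import doctest


def share_of_total(values):
    """Return each value as a share of the total.

    Examples
    --------
    >>> share_of_total([1, 1, 2])
    [0.25, 0.25, 0.5]
    """
    total = sum(values)
    return [v / total for v in values]


# Find the >>> examples in the docstring and run them. Any mismatch between
# the documented output and the actual output is printed as a failure.
finder = doctest.DocTestFinder()
runner = doctest.DocTestRunner(verbose=False)
for test in finder.find(share_of_total, globs={"share_of_total": share_of_total}):
    runner.run(test)
results = runner.summarize(verbose=False)
print(results)
```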

This is all very nice, but lots of people don’t want to go trawling through code. They want to read a glossy guide. The problem with guides, though, is that they’re often left to go stale while the code itself changes. You may have seen situations where there’s an Excel-based model and, somewhere, floating around, a Word document explaining what that Excel model does. But who knows whether the two are being kept up to date together?

Fortunately, there’s a way to build documentation that sits with your code, and is in lock-step with it. The technology is called Quarto, and it’s a flexible document publishing system (where “document” includes websites and slides!) Perhaps the greatest power of Quarto is that you can put chunks of code into the source that gets compiled into a document and, in that final product, the outputs from the code will appear too (and only the outputs if you wish.) This means you can create documentation with Quarto (in the form of a Word document or website) and keep the source files for that documentation in exactly the same place as your code. Even better, you can include examples of using the code in the documentation and you know it will be exactly the same code as in your software, and that it will work: if it doesn’t, the documentation simply won’t build!

But wait… you can go even further! You’ve spent all of this effort on lovely docstrings that tell people about how the modular parts of the code work and are meant to be used, and perhaps you even have examples in there too. What if you could automatically include all of that nice within-code documentation in your actual documentation? There’s a package for that! The massively underrated Quartodoc allows you to automatically pull docstrings from your code base and insert them into your documentation. It really is efficient, helpful, and a lot of fun too.

For an example of this self-documenting behaviour in action, check out the website for my package Specification Curve or for the econometrics regression package Pyfixest. In both cases, the writing is interspersed with examples of running real code from the packages. For both websites, there’s a reference page where parts of the code are automatically documented using Quartodoc.

A lot of this is overkill for a lot of projects. But for complex analysis code on the critical path to a major publication that your institution produces? It’s a huge help and likely worth it.

Model Behaviour

When it comes to models, there are a lot of bad practices around. For me, the most worrying is that most models are not versioned. This means that if a decision was taken, or an analysis performed, with a model at time \(T=t\) and it’s now time \(T>t\) and the model is different, you’ve very little chance of getting back to the original model. Yes, this is true even if you’re using version control. That’s because a model is composed of three things: the code, the data, and (often) the random numbers used. You should version control code, and ideally you’d set the seed of your random numbers in that code too. But few people version data, so even if you have code and random numbers under control, you’ll need to version models.
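One way to make the “three ingredients” point concrete: a model version is only pinned down if the code, the data, and the random seed all are. The sketch below (the model_version_id helper is hypothetical, not from any library) derives a version identifier by hashing all three, so a change to any one of them produces a new identifier. A model registry does this kind of bookkeeping for you, and stores the fitted model too.

```python
import hashlib


def model_version_id(code: str, training_data: bytes, seed: int) -> str:
    """Return a short fingerprint covering model code, training data, and seed."""
    digest = hashlib.sha256()
    for part in (code.encode("utf-8"), training_data, str(seed).encode("utf-8")):
        # Hash each ingredient separately so their boundaries can't blur together.
        digest.update(hashlib.sha256(part).digest())
    return digest.hexdigest()[:12]


code = "def fit(x, y): ..."
data = b"date,value\n2023-01-01,1.0\n2023-02-01,1.2\n"

v1 = model_version_id(code, data, seed=42)
v2 = model_version_id(code, data + b"2023-03-01,1.3\n", seed=42)  # only the data changed
v3 = model_version_id(code, data, seed=42)  # identical inputs

print(v1 == v3)  # True: same code, data, and seed give the same version
print(v1 == v2)  # False: new data means a new version
```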

Why does this matter for efficiency? Not knowing why some part of your analysis is the way it is, and having to dig back through and do detective work, is very time-consuming. And it happens fairly often with models. A classic example would be if you were asked to do a retrospective forecast evaluation: how did the model you used two years ago perform? Has performance degraded since then? Versioning also lets you test whether changes you’ve made to the model’s algorithm are actually improving its performance. So investing in model versioning can reduce time costs further down the line.

A second part of model efficiency is continuous integration and continuous deployment. Enough has been written elsewhere about what these are that I’ll give the briefest summary of what they mean in this context. Continuous integration for models could involve automatically evaluating performance, or other metrics, when a new model is created. Continuous deployment would mean that when a new version of a model is ready, it automatically gets served up as an API.
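A continuous-integration step for models might be as simple as this: when a candidate model is created, score it on held-out data and only promote it if it beats the version currently in use. The sketch assumes models are plain Python callables and uses mean absolute error; the names (champion, challenger, should_promote) and the evaluation data are made up for illustration. In a real pipeline, a check like this would run automatically on each change.

```python
from statistics import mean
from typing import Callable, Sequence

Model = Callable[[float], float]


def mean_absolute_error(model: Model, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Average absolute gap between a model's predictions and the outcomes."""
    return mean(abs(model(x) - y) for x, y in zip(xs, ys))


def should_promote(challenger: Model, champion: Model,
                   xs: Sequence[float], ys: Sequence[float],
                   min_improvement: float = 0.0) -> bool:
    """Promote the candidate only if it cuts error by more than min_improvement."""
    improvement = mean_absolute_error(champion, xs, ys) - mean_absolute_error(challenger, xs, ys)
    return improvement > min_improvement


def champion(x: float) -> float:
    """Model version currently in use (illustrative)."""
    return 1.5 * x + 0.5


def challenger(x: float) -> float:
    """Candidate model from the latest code change (illustrative)."""
    return 2.0 * x


# Made-up held-out evaluation data, for illustration only.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

if should_promote(challenger, champion, xs, ys):
    print("Challenger beats champion: promote and deploy the new version.")
else:
    print("No improvement: keep the current version.")
```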

The latter idea, that models get served up as an API, is really important for efficiency. Today, if I’m in department X and I want to use a model in department Y to get predictions, I have to email someone in department Y, get them the permissions for the data that will form the input and then the data itself, and persuade them to take time out of whatever else they were doing to run it! If the model is deployed, I skip all that, and can work asynchronously—I simply use my data to ping the API and get the prediction straight back into my analytical tool. There’s more about asynchronous working and its efficiency benefits here.
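To make “ping the API” concrete, here is a self-contained, standard-library-only sketch: a toy model (a hypothetical linear rule standing in for department Y’s model) is served over HTTP on the local machine, and a client in the same script sends it JSON and gets a prediction straight back. The endpoint path and payload shape are assumptions; a real deployment would sit behind authentication on an internal server or cloud service rather than localhost.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: dict) -> float:
    """Toy stand-in for department Y's model: a simple linear rule."""
    return 2.0 * features["x"] + 1.0


class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON request body, run the model, and reply with JSON.
        length = int(self.headers["Content-Length"])
        features = json.loads(self.rfile.read(length))
        body = json.dumps({"prediction": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for this demo


# Department Y: serve the model. Port 0 lets the OS pick any free port.
server = HTTPServer(("127.0.0.1", 0), PredictionHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Department X: send its own data and get a prediction straight back.
request = urllib.request.Request(
    f"http://127.0.0.1:{server.server_port}/predict",
    data=json.dumps({"x": 3.5}).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxy for localhost
with opener.open(request) as response:
    result = json.loads(response.read())

print(result)  # {'prediction': 8.0}

server.shutdown()
server.server_close()
```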

There is a way to get most of these benefits pretty easily on the cloud using a model registry: a repository for privately storing models and their versions (from which those models can also be deployed.) I wrote at length about model registries here and, rather than repeat myself here, I’ll defer the reader to that post for more information.

Artificial Intelligence (AI)

A former boss of mine, now even more heavily invested in the AI world than he was when we worked together, recently told me “don’t have an AI strategy, have a business strategy that makes use of AI.” It stuck with me, partly because there are so many AI strategies around, but mostly because he’s right: AI is a tool and not a business plan. We don’t have a keyboard strategy or an email strategy.[8]

[8] Though now I think about it, perhaps there’d be some merit to the latter?

That said, AI is also quite new and people are still thinking through how it can be used to improve efficiency. So, in this section, I offer a few ideas around using AI to improve analysis and operations. (In the previous post in this series, which covered communication and co-ordination, some AI uses also featured—for transcription, meeting summarisation and minutes, and for searching the stock of institutional knowledge.)

A huge one is as a coding aid. GitHub Copilot is the leader, and large-institution-friendly in the sense that it’s all owned by Microsoft. I’ve been hugely impressed by, for example, Claude 3.7 Sonnet as a back-end model. Whether you’re a new developer who needs a chat with an artificial coding expert, or an experienced developer who knows what they need and can prompt the AI in the right way, coding aids are a huge benefit, though they are not trivial to use and there is a learning curve. It’s also very possible that, in the near future, AI models will be able to look at issues and raise pull requests without intervention. Coding aids are also helpful in a world where data science talent is scarce. The prolific Simon Willison has written about how he uses LLMs to help him write code, and there are likely few guides out there today as good as his.

Another area where AI is particularly helpful with efficiency is review and critique. As a manager, you often end up saying the same kinds of things over and over again, whether that’s about a note, or code, or a presentation. What if I, as a manager, had a set of prompts that staff could put in front of their content in a large language model (LLM) query, giving them a helpful first cut of what I would have said anyway? Our time together as two humans reviewing the work could then be focused on the less obvious, more subtle, and frankly more interesting questions and issues. A simpler way to do this is for the managee to just ask for a general review. If you do research, LLMs also do a reasonably good job of acting as practice reviewers.
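A minimal sketch of the standard-prompts idea, assuming nothing about which LLM or API you use: the manager maintains a small library of review prompts, and staff prepend the relevant one to their work. The prompt wording and the build_review_query helper are hypothetical; the output is just text to paste into, or send through, whatever LLM tool your organisation has approved.

```python
REVIEW_PROMPTS = {
    "note": (
        "You are reviewing a briefing note for a senior audience. Check that the "
        "main message is in the first paragraph, that every number has a source "
        "and a unit, and that jargon is explained. List issues as bullet points."
    ),
    "code": (
        "You are reviewing analytical Python code. Flag missing docstrings, "
        "hard-coded file paths, untested functions, and unclear variable names. "
        "Suggest concrete fixes."
    ),
}


def build_review_query(kind: str, content: str) -> str:
    """Put the manager's standard prompt in front of a piece of staff work."""
    if kind not in REVIEW_PROMPTS:
        raise ValueError(f"No standard prompt for {kind!r}; choose from {sorted(REVIEW_PROMPTS)}")
    return f"{REVIEW_PROMPTS[kind]}\n\n--- Work to review ---\n{content}"


query = build_review_query("code", "def f(x):\n    return x * 1.2")
print(query)
```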

One area of LLMs that is likely to grow is auto-analysis. Instead of me as an analyst having to come up with, say, SQL queries to explore the data, I just say to the LLM, “start exploring these data and tell me about anything useful.” It doesn’t have to be analysis; it could be writing some software too. Quite a few models now have code execution capabilities, and consumer-facing products in this space will only grow over time. This is going to democratise who can do analysis, which is a good thing, and will be especially helpful for senior leaders who want to make quick queries.

I’m fairly sure I don’t believe it[9], but the theory that geniuses stopped appearing because we stopped educating a handful of people with uber-elite tutors (aka aristocratic tutoring) has always stuck with me. However, you don’t have to believe in the hard form of this thesis to think that having an extremely good tutor who is available 24/7 would be helpful for learning. Well, let’s now imagine that everyone in the public sector can have access to the most knowledgeable (if not always the smartest) tutor on the planet! The possibilities for (self-)teaching using LLMs are strong. I’ve found that I can simply say, “write some questions to test me on X”, or “prepare a lesson on Y”, and get something very sensible.

[9] Many others aren’t convinced either.

My final category of ways that AI can immediately help with public sector efficiency is perhaps the most obvious one: improving analysis directly. I wrote a lot about the ways that data science more broadly can help with efficiency in my post on data science with impact, so I’ll refer you to that post to find out more about the possibilities in this space. I also have a recent paper and book chapter out on this very question, called “Cutting through Complexity: How Data Science Can Help Policymakers Understand the World”, which is chock full of examples.

Automating and organising the office

Automation with code

On this blog, we like code, because it’s transparent, usually it’s FOSS, it’s auditable, and it’s reproducible. So, where possible, it’s best practice to automate with code. What kind of office tasks can be done with code?

In the private sector, tools like Slack—with their very powerful code integrations—seem to be more dominant. However, a lot of public sector organisations use Microsoft and its suite of office tools. These are, happily, scriptable with code. In particular, Microsoft has tools that aid with automating the use of its office software, including:

- Microsoft Authentication Library, for authentication with Microsoft Azure Active Directory accounts (AAD) and Microsoft Accounts (MSA) using industry standard OAuth2 and OpenID Connect. Basically, this logs you in to Microsoft services when using code.

- Microsoft Graph API, for interacting with cloud-based Microsoft software products (eg Outlook.) Although it’s an API, there is an associated front-end Python package too, called Microsoft Graph SDK. There’s also an interactive Graph Explorer tool.

Third parties have built other, simpler-to-use packages on top of these. A notable example is the Office365-REST-Python-Client. That page has lots of examples but, just to give you a flavour, here’s how to send an email in code:

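```python
from office365.graph_client import GraphClient

# acquire_token_func is a function you supply that returns a Microsoft Graph
# access token (for example, one obtained with the Microsoft Authentication
# Library); it is not defined in this snippet.
client = GraphClient(acquire_token_func)

client.me.send_mail(
    subject="Meet for lunch?",
    body="The cafeteria is open.",
    to_recipients=["hungryfriend@microsoft.com"],
).execute_query()
```

There’s support for doing operations with Outlook, Teams, OneDrive, SharePoint, OneNote, and Planner (a task manager).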

Perhaps a couple of examples would give you a sense of how this can be useful.

Imagine you have a reproducible analytical pipeline (RAP) that produces a report, as discussed above. Great, you have a report; now what? You can use code for that last step too, emailing the report out to stakeholders or putting it in a SharePoint folder.

Another example: suppose that, like me, you work with your team on a Kanban board where code tasks are assigned to people. You could set up a GitHub Actions workflow so that each time someone is assigned to a card or issue on that Kanban board, a task is also sent to that person’s Planner app.

One more example: people are always emailing documents to read. As noted in the previous post, though, email is a bad way to communicate source information. Instead, you can set up code to watch a SharePoint folder for any new documents dropped into it. When a new document arrives, the people who need to read it get a notification that it’s there waiting for their review.
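As a sketch of the folder-watching idea, the code below checks a folder and reports files it hasn’t seen before. To keep it self-contained, it watches a local folder (which could, for instance, be a SharePoint library synced to your machine) using only pathlib; actually notifying reviewers would be a separate step, for example via the Graph-based email code above. The find_new_files helper is hypothetical, and the watchdog package offers event-driven watching as an alternative to this polling approach.

```python
import tempfile
from pathlib import Path


def find_new_files(folder: Path, seen: set[str]) -> list[Path]:
    """Return files in `folder` not seen on a previous check, and record them."""
    new_files = sorted(
        path for path in folder.iterdir()
        if path.is_file() and path.name not in seen
    )
    seen.update(path.name for path in new_files)
    return new_files


# Demo on a throwaway folder. In real use you would call find_new_files in a
# loop (e.g. every few minutes) and send a notification for each new file.
with tempfile.TemporaryDirectory() as tmp:
    inbox = Path(tmp)
    seen: set[str] = set()
    (inbox / "draft_report.docx").write_text("v1")
    first = find_new_files(inbox, seen)   # picks up draft_report.docx
    (inbox / "annex.xlsx").write_text("data")
    second = find_new_files(inbox, seen)  # only the newly added annex.xlsx
    for path in second:
        print(f"New document waiting for review: {path.name}")
```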

If you’re on Google Docs, there’s an API for that too. And there are plenty of other office efficiency code tools not from the big tech firms. Here’s a fairly random selection:

- Good-quality, person-labelled transcripts of meetings: see this post on how someone automated their podcast transcription process with local AI (complete with diarization, ie labelling who is speaking).

- Rooting out any dead links

- Creating QR codes

- Automating generic interactions with your computer (aka robotic process automation)

- Renaming, sorting, deleting, or moving hundreds of files at once (using tools like pathlib, watchdog, and shutil)

- Web-scraping, of course

- Scheduling tasks to happen while your computer is running

A quick example of the latter, scheduling, is:

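```python
import time

import schedule  # third-party package: pip install schedule


def morning_task():
    print("Good morning! Running your daily automation task...")


# Register the job to run every day at 08:00.
schedule.every().day.at("08:00").do(morning_task)

# Keep the script alive, checking once a minute whether any job is due.
while True:
    schedule.run_pending()
    time.sleep(60)
```

There are really incredible possibilities in this space.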
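The bulk file-management item in the list above is similarly short to script. This minimal sketch (the sort_by_extension helper is hypothetical) moves every file in a folder into a subfolder named after its file extension, using pathlib and shutil. It runs on a throwaway temporary folder here; try it somewhere safe before pointing it at anything important.

```python
import shutil
import tempfile
from pathlib import Path


def sort_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension.

    Files without an extension go into 'no_extension'. Returns a count of
    files moved per subfolder.
    """
    counts: dict[str, int] = {}
    for path in list(folder.iterdir()):  # snapshot before we start moving things
        if not path.is_file():
            continue
        subfolder_name = path.suffix.lstrip(".").lower() or "no_extension"
        destination = folder / subfolder_name
        destination.mkdir(exist_ok=True)
        shutil.move(str(path), str(destination / path.name))
        counts[subfolder_name] = counts.get(subfolder_name, 0) + 1
    return counts


with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for name in ["q1.csv", "q2.csv", "notes.txt", "README"]:
        (folder / name).write_text("example")
    moved = sort_by_extension(folder)
    layout = sorted(
        p.relative_to(folder).as_posix() for p in folder.rglob("*") if p.is_file()
    )

print(moved)
print(layout)
```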

Low or no code automation

There’s a huge barrier to automating with code, which is that most people in most organisations can’t code. Overall, for the reasons listed, code-based automation is to be preferred. However, we should not let the perfect be the enemy of the good, and proprietary low- or no-code tools can democratise the ability to automate tasks. The most relevant example of such a tool in the public sector is Microsoft’s Power Automate, which is described as “A comprehensive, end-to-end cloud automation platform powered by low code and AI.”

Power Automate is not free, but if your institution uses Microsoft then there’s a chance that you have access to it as part of a bundle. Power Automate’s standout feature is that you can create automated flows simply by pointing and clicking (and sometimes filling in a few details.) For the automations it covers, it’s extremely convenient. Some examples of what can be automated include:

- Sending a customised email when a new file is added to a folder on SharePoint

- Scheduling a recurring message in a Teams chat

- Saving an email to OneNote

There are also ‘connectors’ that provide integrations with other popular services and software, including GitHub, Gmail, Slack, etc.

If you have the relevant Microsoft subscription, you can find example templates and connectors here.

Conclusion

In this post, I’ve explored a range of ideas to boost efficiency in public sector analysis and operations. Investing in better technology, embracing open-source software, data sharing, and automating analytical processes have the potential to yield significant productivity improvements. The same goes for implementing reproducible analytical pipelines, maintaining comprehensive technical documentation, versioning models, strategically integrating AI tools, and automating routine office tasks.

These practices are likely to boost productivity partly by being, in some cases, cheaper. But their biggest contribution to productivity is likely to come from reducing errors, saving time, enabling more analysis, and increasing agility.